Hat tip to Karen L. Myers.

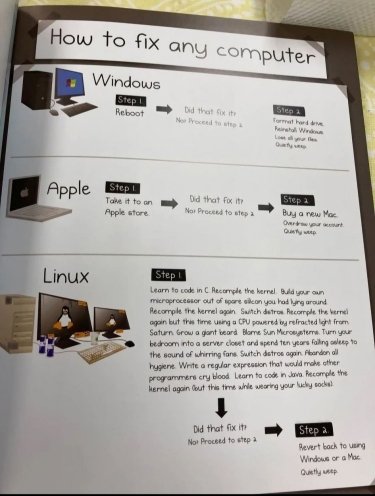

How to Fix Any Personal Computer


PC Problem Fixed


Happily, my self-inflicted partition disaster proved easy to fix.

I concluded that fixing the problem required the kind of utility programs only PC repair shops have on hand, something to get in and eliminate that GRUB Linux boot-loader, so I hauled the laptop down to Dok Klaus in Warrenton.

Klaus had it fixed the same day and only charged me for one hour of service.

As PC problems go, it was ultimately minor. Now I have my entire hard drive to play with.

How Dumb Am I?


NYM readers may at least be amused.

It’s like this. I bought a Sony Vaio laptop a good while back. It was a bargain, but it came with Vista installed.

At that particular moment in history, I was feeling experimental. I felt like playing with Linux, and I had a hankering to see if I could possibly adapt to the Mac OS environment, one-button mouse and all. So I got a free copy of Ubuntu and bought a copy of Leopard on eBay. I had been reading that it was possible to install Leopard on a Vaio with some fiddling.

None of this worked out for me.

Leopard could not relate to the notebook’s video card, so I simply gave up and installed XP on the second hard drive partition. I wasted hours trying to use Linux, but it was just too much trouble to overcome the absence of a readily available driver for the wireless card. Linux worked fine. It just could not connect to the Internet.

So there I was with 80 GB of my hard drive devoted to a Linux installation I was not actually using. But, hey, I still had about 60 GB with Win XP on it, which was working fine.

But, over time, that 60 GB began to fill up. I trashed the games I wasn’t actively playing and purged several large programs. Then I started moving all the image files off the PC onto various backup drives. But I had just installed Lightroom and Visio, and C: was getting close to full again, with fewer and fewer items left to move. Last night I got to thinking that I ought to do something about all this.

So I Googled the phrase “eliminate partition” and, lo and behold, there was a link to a discussion explaining that you could do that by going to Start > Control Panel > Administrative Tools > Computer Management > Storage > Disk Management; then all you had to do was right-click on the offending 80 GB Linux partition and select Delete.

“What could possibly go wrong?” I thought to myself. Ubuntu goes bye-bye. The 80 GB Linux partition returns to being part of the ordinary C: drive. I have lots of disk, and everyone is happy. So I hit “Delete.”

Then I looked at the properties of the C: drive, so I could admire all the great new space I had created.

Hmmm. No change. The only difference was that second partition was now unlabeled.

I guess I need to reboot before the change takes effect, I concluded. This would be the moment of truth. If I had screwed the pooch, I would soon find out. But how likely was that?

My keen mind, doubtless impacted by age and senility, had overlooked the obvious consideration that I had installed Ubuntu first, and Ubuntu had put itself in charge of the boot-up process.

So the PC shuts down, starts to come back up, and GRUB (Ubuntu’s GRand Unified Bootloader) starts looking for that now-unlabeled Linux partition, can’t find it, and sits there… permanently, announcing Error 17.

Error 17 means that GRUB can’t mount the partition it’s looking for. It then freezes and sulks.

So, this is how to disable your PC and create a fine opportunity to research sub-operating system levels of PC operation in both Windows and Linux lands.

Blogging will be less frequent for a few days. I’m using an older, slower machine.

Linux: A Cautionary Tale


Since I detest Vista, I’ve started fooling around with Linux on a new laptop. Ubuntu installed easily, but there is this little problem with accessing the Internet.

My wife sent me the following cartoon some weeks ago as a warning, and I’m afraid it already seems to be a very accurate picture of my Linux experience.

Linux Winning in Hollywood


Mac may be humiliating poor old PC in those amusing television commercials, but both of them have been caught napping by the penguin in the high-tech world of special effects, Steven J. Vaughan-Nichols reports at Computerworld.

While top animation and FX (special effects) programs are run on Macs, and some of them, like RenderMan Pro Server, are being ported to Windows, it’s on Linux clusters that the really serious movie and television visual effects are created. As Robin Rowe writes at LinuxMovies.org, “In the film industry, Linux has won. It’s running on practically all servers and desktops used for feature animation and visual effects.”

Rowe’s not just being a Linux booster. It’s the Gospel truth. The animation and FX for Indiana Jones and the Kingdom of the Crystal Skull; Star Wars: The Clone Wars; WALL-E; 300; The Golden Compass; Harry Potter and the Order of the Phoenix; and I Am Legend, to name but a few recent movies, were all created using Pixar’s RenderMan and Autodesk Maya running on Linux clusters.

The really short version of why this is so: Linux clustering enables you to put massive computational firepower into rendering 2D and 3D images. It’s ironic. While getting the most out of NVIDIA and ATI graphics cards on a Linux desktop is still a pain, and there’s always some trouble dealing with proprietary video formats on Linux, the top animated and FX-heavy videos usually have their start on Linux systems.

Specifically, most photo-realistic special effects are created with programs that comply with Pixar’s RISpec (RenderMan Interface Specification). RISpec is an extremely detailed open-standard set of APIs (application programming interfaces) for 3D graphics rendering programs. To be more precise, RISpec isn’t quite an open standard. While Pixar, the animation giant owned by Disney, has published the specification for all to use, and no longer even requires a no-charge license to create a RISpec-compliant rendering program, Pixar doesn’t go out of its way to specify exactly how developers can or can’t use RISpec.

That said, there are open-source RISpec-compliant programs like Pixie and other rendering programs such as Blender, which can be used as a source for RISpec software. Pixar’s RenderMan software suite itself, while it relies on Linux in most animation and FX shops, is unlikely ever to be open-sourced.

So, while you can’t point to animation and special effects software as a major win for open-source software, there is absolutely no doubt that every time you gasp at a breathtaking escape by Indy or grin at a particularly clever visual bit of fun in Ratatouille, you’re appreciating the power of Linux.

Doom for Sysops


Shotgunning the processes

Dennis Chao proposes using DOOM as the user interface for system administration.

What a great idea!

Brittle Software, Antigorai, and Culture


Jaron Lanier (above) writes about Technology the way certain of my college friends used to talk about these kinds of things after a couple of hash brownies. This specific (brilliant, crossing the barriers of a variety of separate and distinct topics, wildly original and speculative, and a trifle daft) form of discourse was referred to in our circles as space-ranging. Criticized by his interlocutors for his prolixity, for the profusion of his ideas, for their chaotic disorganization, and for indulging in the characteristic intellectual overreach of the seriously stoned, one Early Concentration Philosophy classmate of mine had on a particular occasion declared memorably in his own defense: “I am a Space Ranger!”

As the rings of Saturn fade distantly in the viewfinder, Lanier remarks:

As it happens, I dislike UNIX and its kin because it is based on the premise that people should interact with computers through a “command line.” First the person does something, usually either by typing or clicking with a pointing device. And then, after an unspecified period of time, the computer does something, and then the cycle is repeated. That is how the Web works, and how everything works these days, because everything is based on those damned Linux servers. Even video games, which have a gloss of continuous movement, are based on an underlying logic that reflects the command line.

Human cognition has been finely tuned in the deep time of evolution for continuous interaction with the world. Demoting the importance of timing is therefore a way of demoting all of human cognition and physicality except for the most abstract and least ambiguous aspects of language, the one thing we can do which is partially tolerant of timing uncertainty. It is only barely possible, but endlessly glitchy and compromising, to build Virtual Reality or other intimate conceptions of digital instrumentation (meaning those connected with the human sensory motor loop rather than abstractions mediated by language) using architectures like UNIX or Linux. But the horrible, limiting ideas of command line systems are now locked-in. We may never know what might have been. Software is like the movie “Groundhog Day,” in which each day is the same. The passage of time is trivialized.

—————-

But, as is often the case with space-ranging, there is some very good stuff in here. The concept of the Antigora, i.e., a privately owned marketplace whose owner benefits both from its use by, and from the volunteer labor of, entrants, is potentially quite useful.

I have a strong suspicion that Lanier’s use of Agora, and variations thereon, as his preferred term for one kind of marketplace and another, stems from the influence of the late Samuel Edward Konkin III (1947-2004), founder of a unique strain of California counter-cultural Libertarianism which he called Agorism, whose theories were promulgated via Sam’s own Agorist Institute. Potlatch metaphors were also a characteristic trope of Konkinian Libertarianism. One can hear the echo of Sam Konkin’s sunny optimism in the following analysis:

Perhaps it will turn out that India and China are vulnerable. Google and other Antigoras will increasingly lower the billing rates of help desks. Robots will probably start to work well just as China’s population is aging dramatically, in about twenty years. China and India might suddenly be out of work! Now we enter the endgame feared by the Luddites, in which technology becomes so efficient that there aren’t any more jobs for people.

But in this particular scenario, let’s say it also turns out to be true that even a person making a marginal income at the periphery of one of the Antigoras can survive, because the efficiencies make survival cheap. It’s 2025 in Cambodia, for instance, and you only make the equivalent of a buck a day, without health insurance, but the local Wal-Mart is cheaper every day and you can get a robot-designed robot to cut out your cancer for a quarter, so who cares? This is nothing but an extrapolation of the principle Wal-Mart is already demonstrating, according to some observers. Efficiencies concentrate wealth, and make the poor poorer by some relative measures, but their expenses are also brought down by the efficiencies.

—————-

An amusing read and a fine provocation. John Perry Barlow, Eric S. Raymond, David Gelernter, and Glenn Reynolds will all be replying.

—————-

Hat tip to Glenn Reynolds.